Tag: artificial intelligence

AI and Machine Learning

Artificial intelligence (AI), including machine learning (ML) and deep-learning techniques (DL), is poised to become a transformational force in healthcare. The various stakeholders in the ecosystem all stand to benefit from ML-driven tools. From anatomical geometric measurements to cancer detection, radiology, surgery, drug discovery and genomics, the possibilities are endless. ML can lead to increased operational efficiencies, extremely positive outcomes and significant cost reduction.

Opportunities for machine learning in healthcare

There is a broad spectrum of ways that ML can be used to solve critical healthcare problems. For example, digital pathology, radiology, dermatology, vascular diagnostics and ophthalmology all use standard image-processing techniques.

Chest X-rays are the most common radiological procedure, with over two billion scans performed worldwide every year, which amounts to roughly 5.5 million scans a day. Such a huge quantity of scans imposes a heavy load on radiologists and taxes the efficiency of the workflow. Methods involving ML, deep neural networks (DNN) and convolutional neural networks (CNN) often outperform radiologists in speed and accuracy, although the expertise of a radiologist is still of paramount importance. However, under stressful conditions during a fast decision-making process, the human error rate could be as high as 30 %. Aiding the decision-making process with ML methods can improve the quality of the result and provide radiologists and other specialists with an additional tool.

Machine learning on the test bench

Validations of ML are today coming from multiple and very reliable sources. In a study by the Stanford ML Group, a 121-layer CNN was trained to detect pneumonia better than four radiologists. In multiple other studies by the National Institutes of Health, DNN models for the early detection of malignant pulmonary nodules, used to diagnose lung cancer, achieved better accuracy than multiple radiologists diagnosing at the same time.

Many procedures within radiology, pathology, dermatology, vascular diagnostics and ophthalmology could involve large image sizes requiring complex image processing. Also, the ML workflow can be computing- and memory-intensive. The predominant workload is linear algebra, which demands many computations and a multitude of parameters.

This results in billions of multiply-accumulate (MAC) operations and hundreds of megabytes of parameter data. Moreover, it requires a multitude of operators and a highly distributed memory subsystem. Performing accurate image inference for tissue detection or classification using traditional computational methods on PCs and GPUs is therefore inefficient. Accordingly, healthcare companies are looking for alternative techniques to address this problem.
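To give a feel for how quickly MAC operations and parameters add up, the following minimal sketch counts both for a single convolution layer. The layer dimensions (a 1024 x 1024 greyscale image, 64 filters of size 7 x 7, stride 2) are hypothetical values chosen for illustration and are not taken from any specific medical-imaging network.

```python
def conv2d_cost(in_h, in_w, in_ch, out_ch, kernel, stride=1):
    """Return (macs, params) for one 2D convolution layer with 'same' padding."""
    out_h = -(-in_h // stride)  # ceiling division
    out_w = -(-in_w // stride)
    macs_per_output = kernel * kernel * in_ch  # one MAC per weight per output value
    macs = out_h * out_w * out_ch * macs_per_output
    params = out_ch * (macs_per_output + 1)    # +1 for each filter's bias
    return macs, params


# Hypothetical first layer applied to a 1024 x 1024 greyscale image
macs, params = conv2d_cost(1024, 1024, in_ch=1, out_ch=64, kernel=7, stride=2)
param_bytes = params * 4  # assuming 32-bit floating-point weights

print(f"MACs: {macs:,}  parameters: {params:,}  weight memory: {param_bytes:,} bytes")
```

Even this single layer needs more than 800 million MACs per image. A deep network stacks many such layers, which is how the totals reach the billions mentioned above.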

Improved efficiency with ACAP devices

Xilinx technology offers a heterogeneous and highly distributed architecture to solve this problem for healthcare companies. The Xilinx Versal Adaptive Compute Acceleration Platform (ACAP) family of system-on-chips (SoCs) features adaptable field-programmable gate arrays (FPGAs), integrated digital-signal processors (DSPs), accelerators for deep learning, SIMD VLIW engines with highly distributed local memory architectures and multi-processor systems. The family is known for its ability to perform massively parallel signal processing of high-speed data in close to real time.

Versal ACAP has multi-terabit-per-second Network-on-Chip (NoC) interconnect capability and an advanced AI-Engine containing hundreds of tightly integrated VLIW SIMD processors. This means computing capacity can be moved beyond 100 tera operations per second (TOPS).

These device capabilities dramatically improve the efficiency with which complex healthcare ML algorithms are solved and help to significantly accelerate healthcare applications at the edge, all with fewer resources and lower cost and power consumption. With Versal ACAP devices, support for recurrent networks could be inherent due to the simple nature of the architecture and its supporting libraries.

Xilinx has an innovative ecosystem for algorithm and application developers. Unified software platforms, such as Vitis for application development and Vitis AI for optimising and deploying accelerated ML inference, mean developers can use advanced devices such as ACAPs in their projects.

Healthcare and medical-device workflows are undergoing major changes. In the future, medical workflows will be “big data” enterprises with significantly higher requirements for computing power, security and patient safety. Distributed, non-linear, parallel and heterogeneous computing platforms are key for solving and managing this complexity. Xilinx devices like Versal and the Vitis software platform are ideal for delivering the optimised AI architectures of the future.

Discover more about Xilinx: www.xilinx.com.

Progress in technology 2021

Humanity has made phenomenal progress thanks to technology. There are more researchers and developers today than ever before. With their passion for technology, they toil to create solutions for the challenges we will face in the future.

The market for AI is growing rapidly

The predicted average annual growth rate of the global market for artificial intelligence is 46.2 per cent. By 2025, the market volume is estimated to be USD 390.9 billion.

(Source: Research and Markets)

AI’s performance increases

In a year and a half, the time required to train a large image-classification system on cloud infrastructure has fallen from about three hours in October 2017 to about 88 seconds in July 2019. During the same period, the cost of training such a system has fallen similarly.

(Source: Stanford University, “The AI Index 2019 Annual Report”)

Masses of semiconductors

2018 – the year in which more than 1 trillion semiconductors were sold for the first time.

(Source: Semiconductor Industry Association, SIA)

The number of people without access to electricity is decreasing

In 2010, there were 1.2 billion people worldwide without access to electricity; by 2018, the number had dropped to 789 million. Renewable energy solutions have played a crucial role in growing the number of people with access to electricity. In 2018, more than 136 million people had a basic off-grid supply of electricity from renewable energy.

(Source: International Renewable Energy Agency, IRENA)

Jobs in renewables

42 million jobs in 2050: Jobs in renewables will reach 42 million globally by 2050, four times their current level, through the increased focus of investment on renewables. Energy-efficiency measures would create 21 million additional jobs, and system flexibility another 15 million.

(Source: International Renewable Energy Agency, IRENA)

Research is ongoing

Over USD 1.8 trillion: Investment in research and development in the ten leading countries in 2019.

(Source: Statista)

Internet users worldwide

Today 4.1 billion people use the Internet. That amounts to 53.6 per cent of the world’s population. In 2005, this figure still stood at per cent.

(Source: International Telecommunication Union)

People’s health continues to improve

Life expectancy at birth increased worldwide from 46 years in 1950 to 67 years in 2010 and, most recently, to 73.2 years in 2020.

(Sources: The Millennium Project, UN)

Interview with Dr Simon Haddadin, CEO of robotics manufacturer Franka Emika

Many innovative technologies like artificial intelligence or machine learning end up on a screen, as Dr Simon Haddadin explains. But he and his team at Franka Emika develop robots that get technology off the drawing board and into the thick of things in the real world. In Haddadin’s words, this not only has an “impact” in a technical sense, but also in social, societal and economic terms.

Despite qualifying as a medical practitioner, he found this new field extremely exciting. Therefore he hung up his stethoscope in 2016 to found Franka Emika together with his brother Sami. They were hoping that the company’s robots would make a difference in the world. Since starting production in 2018, Franka Emika has sold around 3,000 robots for all kinds of applications.

Although Sami Haddadin is the expert when it comes to algorithms and artificial intelligence, his brother Simon was able to bring something else to the table with his medical expertise, because developing robots at Franka Emika is really about understanding human capabilities and transferring them to technology.

Simon Haddadin demonstrated one particularly impressive example of their robots’ capabilities. The SR-NOCS (Swab Robot for Naso- and Oropharyngeal COVID-19 Screening) conducts high-precision, fully autonomous nose and throat swabs on humans to test for COVID-19. The system has already shown off its capabilities in practical settings and been ordered by surgeries. Yet it took one or two sleepless nights and a healthy dose of passion to get this far, as Dr Haddadin emphasises.

Is the SR-NOCS a typical example of your company’s products?

Dr Simon Haddadin: No, it actually isn’t. We view our robotics solution as a hardware and software platform, similar to Apple with its iPhone and App Store. In other words, other parties can take our platform and launch their own completely new solutions based on our system. We were our own customers in a sense when it came to the SR-NOCS – we not only supplied the platform, but also a system solution for a specific application.

Apart from this platform concept, what is so special about your robots?

S.H.: We founded the company with the vision of opening robotics up to anyone and everyone, which is why we gave our robots new abilities. First and foremost among these is a sense of touch, which was realised by equipping each robot with over 100 sensors and lending it a sense of mechanical flexibility rather than rigidity. It can contract and relax muscles the same way that a human can. This enables our robots to work at close quarters with humans without any barriers or other safety guards.

Finally, our robots are as easy to operate as a smartphone. And they also come at just a fraction of the cost of previous models destined for industrial applications.

With your background in medicine, how did you end up managing a robotics company?

S.H.: My brother and I founded the company together. I actually used to be the one with an affinity for technology and would build and program computers at home. My brother, on the other hand, wanted to be a marine biologist. However, neither of us gave enough thought to our respective futures. In the end, it was our mother who enrolled us on our courses: electrical and computer engineering for my brother, and medicine for me.

In other words, the exact opposite of your interests…

S.H.: That’s true.

My brother went on to develop an algorithm that gave robots a sense of touch, but nobody believed it would work. I told him back then that you have to back everything up with statistics in medicine. That was around ten years ago at Christmas. Instead of eating dinner together on Christmas Day, we went to the lab at the German Aerospace Center, where he was working at the time, and conducted crash tests. We wanted to establish where the boundary was between danger and safety for humans. This also gave rise to my thesis in the field of biomechanics.

At the time, we both saw how it was possible to conduct a lot of research without actually setting foot in the real world. That was the motivation for us to found our own company.

What is it that fascinates you about robotics?

S.H.: The impact that I have at Franka Emika is entirely different to being just a drop in the ocean as a doctor. In medicine, you learn a great deal about what people are made of, but only a little about how they actually work. This is different in advanced robotics as we understand it. For me, the most exciting thing is that you have to acquire an understanding of human abilities and then put them into a machine. And, of course, it’s really not hard to get excited about all the possibilities that this opens up.

Ultimately, many other cutting-edge technologies like machine learning or artificial intelligence end up on a screen. However, the most important human trait is the ability to interact with the real world, even in total chaos: a person has no idea what is heading their way, yet they can still get their bearings and interact with their surroundings. For me, this intervention in the real world is what makes robotics so exciting. Our robots should help people to put their abilities to good use even more simply and effectively.

You’ve mentioned “impact” – what exactly do you mean by that?

S.H.: This can essentially be divided into two points. Firstly, I am fortunate enough to have an extremely ambitious and talented team here. This team enables us to bring a project like SR-NOCS to fruition within a very tight turnaround time. Innovation is our most valuable asset, and we give our colleagues a very long leash. In this way, they can actually put their ideas into practice. This is what makes our innovations and technological breakthroughs possible in the first place.

The second point concerns real-world applications: our systems are primarily used in the “3C” industry: computers, communication devices and consumer electronics. Yet the year before last saw the closure of the last computer factory in Europe. Although we want to bring about a digital revolution in Europe, we can’t manufacture a single computer here… This is a consequence of the different standards that apply in countries half the world away: after seeing conditions in factories there, you have to admit that they come pretty close to modern-day slavery. This is the only way our smartphones can be as cheap as they are.

Therefore, one “impact” is the fact that we can banish working conditions like this with our robots.

The other impact is that we want to help Europe achieve economic and technological independence again. Nowadays, we are merely consumers of information technology and have ceased to be suppliers. Our vision is to make manufacturing in such fields economically viable in Europe once more. For example, our own production facilities are located in the Allgäu region of Germany, where our robots are manufactured by other robots for the most part. This allows us to manufacture economically right on our doorstep.

Another aim is to make mechatronic systems like autonomous driving or even autonomous flying possible in the long term – we still boast a lot of expertise in these fields in Europe. If at all possible, we want to ensure that these industries don’t go the way of the IT sector, with production for such technologies migrating away from Europe.

We try to do “our bit” to prevent this.

But your motto is “robotics for everyone” – so not just for factories?

S.H.: That’s correct, although we need industry to achieve economies of scale. You first need a market in which you can sell a certain number of units in order to bring prices down further. Although we’ve already made a quantum leap in terms of the cost of robots, they are still too expensive for private use. We therefore need to increase the scale further to reduce costs. In doing so, we can reach a cost range that would also be acceptable for household appliances. This is what the goal must be.

It’s important that robots are not perceived as toys, but as home assistants. Of course, that’s still a few years off, but we are already laying a lot of foundations in development. This not only involves our stationary robots. We are also building service robots already; essentially mobile robotic assistants. Our aim is for people to use robots for assistance at home during the “third age” and “fourth age” of their lives, whether that be for loading the dishwasher, preparing meals or dispensing pills. Robots can also assume an important role as a means of communicating.

In the future, people will be able to communicate with each other haptically using robots, as opposed to just by telephone or videoconferencing. The third area of application is medical assistance: in other words, robots might remind someone to take their pills, perform simple tests like taking a temperature or measuring blood pressure and, if necessary, call for an ambulance.

When do you think that such systems will be widely available?

S.H.: In certain applications, they already are. I estimate that it will be five to ten years before this type of thing is widely available.

I think that industrial applications are absolutely essential, although of course I hope that robots will be used much more in everyday situations at some point. For this to be a reality, however, a few things still need to happen in terms of the technology. Appropriate regulations also need to be amended, such as those concerning how people deal with these types of “learning” systems in their day-to-day lives.

So which aspects of the technology need to be refined?

S.H.: For one thing, there is still some work to be done on how machines communicate among themselves. We don’t think that today’s Internet is suitable for this. It’s too centralised – we really need a new type of network, ideally a decentralised one. There is also still a need for development in terms of real-time applications. For machines, “real time” actually means 1,000 signals per second – a figure more than a thousand times higher than the definition of the same term in an IT or Internet context. Many systems are simply not designed for this. In addition, hardware production needs to keep being scaled up in order for the components to get even cheaper.

Robotics is just one of many trending technologies at the moment, however. Which of the “disruptive” technologies like IoT, AI or edge computing will change our society the most?

S.H.: It’s not easy for me to deliver an impartial verdict here – that goes without saying. The great thing about robotics is that it transcends many kinds of technology. It’s actually about taking all of the technologies you just mentioned and merging them. In doing so, you can bring many of these on-trend technologies into the real world.

You have technology on the one hand. But you also need people who have a certain passion for technology. Yet you might get the impression that young people today tend to eschew new technologies. As a young company, what is your own experience of this?

S.H.: Young people today are – thankfully – preoccupied with extremely relevant topics like climate change. That much is a given. However, at the end of the day, I really do think that technology can help us in many ways to solve the major problems that our society faces.

That’s why we want to show young people what kind of doors technology can open. In 2017, we were awarded the Deutscher Zukunftspreis, which came with an endowment of EUR 250,000. With that prize money, we established a foundation that aims to introduce children and young people to technology as early as possible.

We are also a patron of the Munich round of the “Jugend forscht” youth science competition. In all of these activities, we see that there are still plenty of young people who are interested in technology and who also see the kind of difference it can make.

How important is passion when it comes to developing and using new technologies? Is passion absolutely essential, or might it even be a hindrance under certain circumstances?

S.H.: It’s probably a mixture of the two… Passion makes you keep plugging away in the face of all adversity. If you do new things, you will inevitably face a lot of opposition. This can take the form of competitors, who clearly have no desire for new kids on the block to appear. Then there are regulatory matters – in many senses, the world just isn’t ready for new things.

Naturally, the development of the technology itself is sometimes also difficult and drawn-out, because all too often this means long nights and short days when you don’t see your family much. Without a certain passion, it is impossible for someone to defy circumstances like these in the longer term.

“Passion ensures that you carry on despite all the setbacks.”

Dr Simon Haddadin, CEO and Co-founder of Franka Emika

On the other hand, you sometimes need to keep a cool head and not get too hung up on things. After all, after a certain point, you simply can’t sink your teeth into absolutely everything. You eventually need to maintain a certain distance to actually make some money. Were it not for passion, the towel might have been thrown in a long time ago.

SR-NOCS – a medical product with a light touch?

This is where Franka Emika’s robot scores highly with its refined sense of touch. It takes samples so carefully and safely that it has already been approved as a Class I medical product, allowing it to be used in hospitals.

To test a patient, the robot arm first extends a disinfected plastic support through an opening in the test station’s screen. The patient must then position their nose and mouth and confirm that they are ready for the sample to be taken by pressing a pedal. Only then will the robot extend a swab from the support into their nose and mouth, respectively. The robot packages the sample in a tube, removes the plastic support and disinfects the gripper arm – all fully automatically.

Patients tested in this way were impressed by the robotic solution and would be happy to be tested by the SR-NOCS robot again at any time.

Find out more about Franka Emika: www.franka.de

What is AIfES?

The concurrent development and design of chips and software has enabled researchers to realise chips that are not only uncommonly small and energy-efficient, but also boast powerful AI capabilities extending right through to training. One possible application scenario might be in nano drones that can navigate their way through a room independently.

To the untrained eye, the chip does not look any different from the ones found in any ordinary electronic device. Yet the chips developed by the Massachusetts Institute of Technology (MIT) and named Eyeriss and Navion are a revelation and might just be the key to the future of artificial intelligence. Using these chips, even the most diminutive IoT devices could be equipped with powerful “smart” abilities, the likes of which could previously be provided only by gigantic data centres.

Energy efficiency is vital

Professor Vivienne Sze from the MIT Department of Electrical Engineering and Computer Science (EECS) – and a member of the development team – is keen to emphasise that the real opportunity offered by these chips is not in their impressive capability for deep learning. It is much more about their energy efficiency. The chips need to master computationally intensive algorithms and make do with the energy available on the IoT devices themselves. After all, this is the only way that AI can find widespread application on the “edge”. The Eyeriss chip is 10 or even 1,000 times more efficient than current hardware.

A symbiosis of software and hardware

In Professor Sze’s lab, research is also underway to determine how software ought to be designed to fully harness the power of computer chips. A low-power chip called Navion was developed at MIT to answer this question. To name one potential application, this chip could be used to navigate a drone using 3D maps with previously unthinkable efficiency. What is more, such a drone is minute, no larger than a bee.

The concurrent development of the AI software and hardware was crucial in this instance. This enabled researchers to build a chip just 20 square millimetres in size in the form of Navion. This chip only requires 24 milliwatts of power. That is around a thousandth of the power consumed by a light bulb. The chip can use this tiny amount of power to process up to 171 camera images per second. Additionally, it performs inertial measurements – all in real time. Using this data, it calculates its position in the room. It might also be conceivable for the chip to be incorporated into a small pill that could gather and evaluate data from inside the human body once swallowed.
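The two figures quoted above, 24 milliwatts and up to 171 camera images per second, can be combined into an energy budget per image. The short sketch below does this arithmetic. The battery capacity in the second calculation is a purely hypothetical value, used only to show how such a budget translates into runtime.

```python
NAVION_POWER_W = 24e-3      # 24 milliwatts, as stated in the text
FRAMES_PER_SECOND = 171     # maximum camera images per second, as stated


def energy_per_frame_joules(power_w, fps):
    """Energy spent per processed image when running at full frame rate."""
    return power_w / fps


def runtime_hours(battery_wh, power_w):
    """Idealised runtime of the chip alone on a battery of the given capacity."""
    return battery_wh / power_w


frame_energy_mj = energy_per_frame_joules(NAVION_POWER_W, FRAMES_PER_SECOND) * 1e3
hours = runtime_hours(battery_wh=0.1, power_w=NAVION_POWER_W)  # hypothetical 0.1 Wh cell

print(f"Energy per image: {frame_energy_mj:.2f} mJ")
print(f"Runtime on a hypothetical 0.1 Wh cell: {hours:.1f} h")
```

At full frame rate this works out to roughly 0.14 millijoules per image. The runtime figure ignores motors, sensors and conversion losses, so it describes the chip's share of the budget only.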

The chip achieves this level of efficiency through a variety of measures. For one thing, it minimises the volume of data, in the form of camera images and inertial measurements, that is stored on the chip at any given point in time. The developer team was also able to physically reduce the bottleneck between the location where the data are stored and the location where they are analysed, not to mention coming up with clever schemes for the data to be re-used. The way that these data flow through the chip has also been optimised. Certain computation steps are also skipped, such as computations on zeros that can only result in a zero.
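The idea of skipping computations that can only produce zero can be illustrated in software. The sketch below is not the chip's hardware design. It is a plain dot product that skips every multiply-accumulate step in which either operand is zero and counts how many steps were actually carried out. The input values are made up for the example.

```python
def dot_skip_zeros(activations, weights):
    """Dot product that skips multiply-accumulate steps with a zero operand.

    Returns the result and the number of MAC steps actually executed.
    """
    accumulator = 0
    macs_executed = 0
    for a, w in zip(activations, weights):
        if a == 0 or w == 0:
            continue  # the product would be zero, so the step is skipped
        accumulator += a * w
        macs_executed += 1
    return accumulator, macs_executed


# Made-up sparse inputs: most entries are zero
activations = [0, 3, 0, 0, 2, 0, 1, 0]
weights = [5, 1, 4, 0, 2, 7, 0, 3]

result, macs_executed = dot_skip_zeros(activations, weights)
print(result, macs_executed, len(activations))
```

The result is identical to a full dot product, but only two of the eight possible steps are executed. The sparser the data, the larger the saving.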

A basis for self-learning, miniaturised electronics

Research into how AI can be more effectively integrated into edge devices is also being conducted in other institutes. A team of researchers at the Fraunhofer Institute for Microelectronic Circuits and Systems (IMS) has developed a kind of artificial intelligence for microcontrollers and sensors that comprises a fully configurable, artificial neural network.

This solution – called AIfES – is a platform-independent machine-learning library that can be used to realise self-learning, miniaturised electronics that do not require any connection to cloud infrastructure or powerful computers. The library constitutes a fully configurable, artificial neural network. This network can also generate appropriately deep networks for deep learning if needed. The source code has been reduced to a minimum, meaning that the AI can even be trained on the microcontroller itself. Thus far, this training phase has only been possible in data centres. AIfES is not concerned with processing large data volumes. Instead, it is now much more a case of the strictly required data being transferred in order to set up very small neural networks.

The team of researchers has already produced several demonstrators, including one on a cheap 8-bit microcontroller for detecting hand-written numbers. An additional demonstrator can detect complex gestures and numbers written in the air. For this application, scientists at the IMS developed a detection system comprising a microcontroller and an absolute orientation sensor. To begin with, multiple people are required to write the numbers from zero to nine several times over. The neural network detects these written patterns, learns them and autonomously identifies them in the next step.

Studies conducted at the research institutes provide an outlook for how AI software and hardware will continue to develop symbiotically in the future and open up complex AI functions in IoT and edge devices in the process.

Edge Computing helps AI take off

Nowadays, edge devices can run AI applications thanks to increasingly powerful hardware. As such, there is no longer any need to transfer data to the cloud, which makes AI applications in real time a reality, reduces costs and brings advantages in terms of data security.

Today, Artificial Intelligence (AI) technology is used in a wide range of applications, where it opens up new possibilities for creating added value. AI is used to predictively analyse the behaviour of users on social networks, which enables ads matching their needs or interests to be shown. Even facial- and voice-recognition features on smartphones would not work without artificial intelligence. In industrial applications, AI helps make maintenance more effective by predicting machine failures before they even occur. According to a white paper by investment bank Bryan, Garnier & Co., 99 per cent of AI-related semiconductor hardware was still centralised in the cloud as recently as 2017.

The difference between training and inference

One of Artificial Intelligence’s most important functions is Machine Learning, with which IT systems can use existing data pools to detect algorithms, patterns and rules and to come up with solutions. Machine Learning is a two-stage process. In the training phase, the system is initially “taught” to identify patterns in a large data set. The training phase is a long-running task that requires a large amount of computing power. After this phase, the Machine-Learning system can apply the final, trained model to analyse and categorise new data and, finally, to derive a result. This step – which is known as inference – requires much less computing power.
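The difference between the two stages can be shown with a deliberately tiny model. The sketch below uses a single perceptron, chosen here only because it is small enough to follow by hand. Training loops over the whole data set many times and adjusts the weights, whereas inference is a single weighted sum per input. The data set (the logical AND function) and all function names are illustrative assumptions.

```python
def infer(weights, bias, x):
    """Inference: one weighted sum and a threshold per input."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, labels, epochs=20):
    """Training: pass over the data set repeatedly, correcting the weights on errors."""
    weights = [0] * len(samples[0])
    bias = 0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            update = y - infer(weights, bias, x)
            if update != 0:
                errors += 1
                weights = [w + update * xi for w, xi in zip(weights, x)]
                bias += update
        if errors == 0:  # every sample is classified correctly
            break
    return weights, bias


# Illustrative data set: the logical AND of two inputs
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)    # training phase
predictions = [infer(weights, bias, x) for x in samples]  # inference phase
print(weights, bias, predictions)
```

Even in this toy case, training needs several passes over the data, while each inference is a handful of multiplications. With real networks and data sets the gap is far larger, which is why inference is the natural candidate for running on edge devices.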

Cloud infrastructure cannot handle AI requirements alone

Most inference and training steps today are executed in the cloud. For example, in the case of a virtual assistant, the user’s command is sent to a data centre, analysed there with the appropriate algorithms and sent back to the device with the appropriate response. Until now, the cloud has remained the most efficient way to exploit the benefits of powerful, cutting-edge hardware and software. Yet the increasing number of AI applications is threatening to overload current cloud infrastructure.

For instance, if every Android device in the world were to execute a voice-recognition command every three minutes, Google would need to make twice as much computing power available as it currently does. “In other words, the world’s largest computing infrastructure would have to double in size,” explains Jem Davies, Vice President, Fellow and General Manager of the ARM Machine Learning Group. “Also, demands for seamless user experiences mean people won’t accept the latency inherent in performing ML processing in the cloud.”

The number of AI edge devices is set to boom

Inference tasks are increasingly being relocated to the edge as a result, which enables the data to be processed there without needing to be transferred anywhere else. “The act of transferring data is inherently costly. In business-critical use cases where latency and accuracy are key and constant connectivity is lacking, applications can’t be fulfilled. Locating AI inference processing at the edge also means that companies don’t have to share private or sensitive data with cloud providers, something that is problematic in the healthcare and consumer sectors,” explains Jack Vernon, Industry Analyst at ABI Research.

According to market researchers at Tractica, this means that annual deliveries of edge devices with integrated AI will increase from 161.4 million in 2018 to 2.6 billion in 2025. Smartphones will account for the largest share of these unit quantities, followed by smart speakers and laptops.
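The two delivery figures above imply an average annual growth rate, which can be worked out with the standard compound-growth formula. The sketch below is only this arithmetic applied to the quoted numbers. It does not reproduce Tractica's own methodology.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Average yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


deliveries_2018 = 161.4e6  # units, as quoted
deliveries_2025 = 2.6e9    # units, as quoted

growth = compound_annual_growth_rate(deliveries_2018, deliveries_2025, 2025 - 2018)
print(f"Implied average annual growth: {growth:.1%}")
```

The forecast corresponds to deliveries growing by just under 49 per cent per year over the seven-year period.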

Smartphones making strides

Smartphones are a good example of the sheer variety of possible applications for AI in edge devices. To name one example, AI enables the camera of the Huawei P smart 2019 to detect 22 different subject types and 500 scenarios in order to optimise settings and take the perfect photo. In the Samsung Galaxy S10 5G, on the other hand, AI automatically adapts the battery, processor performance, memory usage and device temperature to the user’s behaviour.

Gartner also names a few other potential applications, including user authentication. In the future, smartphones might be able to record and learn about a user’s behaviour, such as patterns when walking or when scrolling and typing on the touch screen, and recognise the user without any passwords or active authentication being required.

Emotion sensors and affective computing make it possible for smartphones to detect, analyse and process people’s emotional states and moods before reacting to them.

For instance, vehicle manufacturers might use the front camera of a smartphone to interpret a driver’s mental state or assess signs of fatigue in order to improve safety. Voice control or augmented reality are other potential applications for AI in smartphones.

CK Lu, Research Director at Gartner, is certain of one thing: “Future AI capabilities will allow smartphones to learn, plan and solve problems for users. This isn’t just about making the smartphone smarter, but augmenting people by reducing their cognitive load. However, AI capabilities on smartphones are still in very early stages.”

Edge solutions: Consumer sector

Edge solutions in the consumer sector are possible thanks to increasingly powerful embedded technologies, which make it possible to equip even everyday devices such as stoves or televisions with edge intelligence. This not only means greater ease of use but also leads to improved operation of the devices. Smart applications with local data processing can also provide added security in the home, for example, or allow simple payments using a smartphone.

Smart devices with their own intelligence are increasingly also conquering the everyday world of the consumer, be it in the form of wearables, in home appliances or with assistance systems that make life simpler and safer for the elderly. There are already some edge solutions in the consumer sector.

Miele, for example, has launched a solution called Motionreact, which the oven uses to anticipate what the user wants to do next. For example, the oven signals the end of a program with an acoustic signal. As the user approaches the appliance, two things happen at the same time: the acoustic signal falls silent and the light in the oven chamber switches on. Alternatively, the appliance and the oven chamber light switch on when approached and the main menu appears on the display. From a technical perspective, the system operates via infrared sensors in the appliance panel, which respond to movements at a distance of approximately 20 to 40 centimetres in front of the appliance.

Edge solutions in the consumer sector

Artificial Intelligence capabilities are being integrated increasingly into consumer devices. Not only do they allow greater ease of use, operability is also improved.

An example of this is the new generation of top TV models from LG Electronics. They have intelligent processors which, thanks to the integrated AI, improve the visual quality. Using deep-learning algorithms, the TVs analyse the quality of the signal source and choose the most suitable interpolation method for optimum picture playback accordingly. Additionally, the processor performs a dynamic fine calibration of the tone-mapping curve for HDR content in accordance with ambient light. The picture brightness is optimised dynamically based on values for how the human eye perceives images under different lighting conditions. High-contrast, detailed pictures with excellent colour depth can be reproduced even in the darkest scenes and even in rooms with high ambient brightness. The room brightness is captured by means of an ambient light sensor in the TV.

Smart devices are also of tremendous assistance when it comes to making life safer and more comfortable for the elderly. This is shown by the example of neviscura from nevisQ. The discreet sensor system integrated in the apartment’s skirting boards allows falls, for example, to be detected automatically and without any additional equipment attached to the body. The nurse-call system then informs the nurse in real time.

The data from the infrared sensors is recorded and analysed in a base station with smart functions. Local data processing immediately detects critical situations in the room, with the base station also acting as an interface to the call system. In the future, the AI-sensor system will also use activity analyses to detect whether a person’s condition is changing and thus prevent critical situations.

Banking sector – one of the largest users of edge technologies

The IoT with its array of consumer wearables along with Edge Computing are also altering life outside of the home. This is especially true of how banks conduct their business.

According to a study by ResearchAndMarkets, the financial and banking sector is one of the biggest users of Edge Computing worldwide. Growing acceptance of digital and mobile banking initiatives and of payment using wearables such as the Apple Watch, a Fitbit or the smartphone is significantly increasing the demand for Edge-Computing solutions. That’s because banking networks need to be as secure and reliable as possible in order to benefit from the advantages offered by IoT technologies, but IoT devices themselves are difficult to secure. To achieve the security level required for banking applications, advanced cryptographic algorithms are needed. However, these CPU-intensive operations are extremely difficult to implement on IoT devices.

The use of security agents at the edge is therefore recommended. For example, this could be a router or a base station installed in the vicinity of the user for processing security algorithms and encrypting data from and to IoT devices. Customers can therefore complete banking transactions securely even with simpler wearables. But as use of IoT devices for processing banking transactions and payments continues to grow, so too does the need to store and process data in edge data centres. Because they are closer to the user, these micro data centres allow data to be processed closer to the source, thus reducing the response times of the system (latency) and also the costs for data transfer. Paying via smartphone is therefore just as fast as or even faster than using cash from a wallet.

Building automation

Equipped with edge systems and AI, modern buildings not only boast unprecedented sustainability but also provide occupants with greater convenience and comfort.

Building requirements are becoming ever more stringent. Not only are buildings supposed to offer comfort, convenience and safety to those who live and work in them, they should also consume less and less energy and incur minimal operating costs.

In order to achieve these targets, buildings are being kitted out with an ever-wider array of technology. Building systems such as lighting, air-conditioning, heating and shading systems or monitoring technology are being interlinked via centralised building-automation systems.

Even if buildings such as these are already referred to as “smart”, they haven’t yet fully earned that moniker. After all, they still lack the capability to predict and communicate that is inherent in truly intelligent systems. Edge solutions can change that. In principle, it would even be possible to control all of these functions via the cloud, since true real-time capability is only required by a select few building systems. Yet there is another good argument for the use of Edge Computing in this context: the systems and sensors in the building gather a vast amount of data, which relates not only to technical components but also to users.

For reasons of data protection, it is therefore an advantage when the majority of the data processing required takes place in edge nodes. In this way, data remains private – and access is independent of the respective cloud connection’s availability. Nonetheless, a hybrid approach (i.e., the combination of edge- and cloud-based computing) will yield the best results in building automation. For example, weather information from the cloud could be used to control the air conditioning, or the building in question could be compared with others to identify potential for improvement.

Bloomberg’s new headquarters

Only by pursuing such approaches can the most sustainable buildings become a reality. These include the new headquarters of media company Bloomberg, which is one of the most sustainable buildings in the world. In comparison to a typical office block, the building in London consumes approximately 73 per cent less water, while energy consumption and the associated CO₂ emissions are 35 per cent lower. Innovative energy, lighting, water and ventilation systems account for the majority of the energy savings. Many of these solutions are unique and enable the building to recover waste, react to changes in the surrounding area and adapt to the way it is used by people.

Norman Foster, founder and Executive Chairman of Foster + Partners, outlines the key features of the building designed by his firm: “The deep-plan interior spaces are naturally ventilated through a ‘breathing’ façade. A top-lit atrium edged with a spiralling ramp at the heart of the building ensures a connected and healthy environment.” In moderate ambient temperatures, the striking external bronze blades open and close, meaning that the building can operate in a “breathable” natural ventilation mode. This reduces energy consumption considerably. Smart CO₂-sensor controls allow air to be distributed according to the approximate number of people present in each zone of the building at a specific point in time. The ability to adapt the flow of air dynamically to the occupancy hours and patterns will save around 600 to 750 megawatt-hours of electricity each year and therefore reduce annual CO₂ emissions by around 300 metric tons.
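
The idea of distributing air according to occupancy can be illustrated with a simple proportional rule. The sketch below is not the control logic used in the Bloomberg building; the function name, the ppm thresholds and the minimum fan setting are all illustrative assumptions.

```python
def airflow_fraction(co2_ppm: float, low_ppm: float = 600.0, high_ppm: float = 1000.0,
                     min_fraction: float = 0.2) -> float:
    """Map a zone's CO2 reading to a fan setting between min_fraction and 1.0.

    All thresholds are hypothetical example values, not figures from the article.
    """
    if high_ppm <= low_ppm:
        raise ValueError("high_ppm must exceed low_ppm")
    span = (co2_ppm - low_ppm) / (high_ppm - low_ppm)
    span = max(0.0, min(1.0, span))  # clamp to the 0..1 range
    return min_fraction + (1.0 - min_fraction) * span


# A nearly empty zone gets the minimum airflow, a crowded one gets full airflow.
for reading in (500.0, 800.0, 1200.0):
    print(reading, round(airflow_fraction(reading), 2))
```

Because more people exhale more CO₂, the reading serves as a rough stand-in for the number of occupants, so sparsely used zones are ventilated less and consume less energy.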

Building automation in cube berlin

One other smart commercial building is the cube berlin. The main goal of the concept drawn up by architects 3XN and brought to fruition by CA Immo is not its spectacular aesthetic form, but rather its artificial intelligence – the so-called “brain” of the building.

CA Immo commissioned start-up Thing Technologies to program the AI. They produced a system that intelligently interlinks all technical systems, sensors and planning, operating and user data, not to mention exerting optimised control over the processes in the building. The “brain” learns from data about operation, users and the environment, then uses this information to come up with suggestions for improvement. For example, unused areas will require neither heating nor cooling, ventilation or light in future. The control system will recognise this accordingly and shut off the relevant systems in these areas. Additionally, tenants in cube berlin can use a specially developed app to control aspects such as the room climate, access controls, parcel station, and much more besides. Users and their needs are at the forefront of the development process.

As such, smart buildings are an entirely new type of commercial property that puts users and their needs first. Thanks to edge computing and AI, they enable an interaction between humans and buildings that was previously impossible.

What is an Adamm?

Adamm, Orb and Co.: not only can today’s wearables record vital data thanks to edge technologies, they can also analyse this information instantly. Smart assistants support employees in healthcare in an incredible variety of ways. Central structures in patient healthcare are reaching their limits when it comes to mastering the challenges in the healthcare sector, due to a lack of trained personnel, chronic diseases and a general need for greater efficiency. Intelligence is increasingly migrating to the edge.

The healthcare sector is facing enormous challenges, because costs are exploding worldwide. Market researchers at Deloitte expect that global health spending will rise to 10 trillion US dollars in 2022, up from 7.7 trillion US dollars in 2017. Chronic diseases are arising with increasing frequency. According to the World Health Organisation (WHO), 13 million people worldwide die each year before reaching the age of 70. The causes are cardiovascular diseases, chronic respiratory diseases, diabetes and cancer. What’s more, it is becoming increasingly difficult to find the right personnel who can offer medical services on a comprehensive basis.

Wearables analyse vital parameters

One solution for overcoming these challenges is to use IoT edge devices and their underlying computer architectures. For example, wearable edge devices can collect, store and analyse critical patient data without having to be in constant contact with a network infrastructure. Such medical products therefore help diagnoses to be made quickly and easily, without the patient necessarily having to attend a medical practice or a hospital. In addition, the information collected can be sent at regular intervals to the central servers in the cloud, where it is checked by the attending physician or stored for long-term diagnoses.

Warning of asthma attacks with Adamm

One example is Adamm – a wearable intelligent asthma monitor that detects the symptoms of an asthma attack before it happens. The wearer can therefore take action before the situation deteriorates. The sensors in the wearable detect the patient’s individual symptoms. They monitor the cough rate, breathing pattern, heartbeat, temperature and other relevant data.

The asthma monitor uses algorithms to learn the patient’s “normal condition”. Its ability to detect when an attack is indicated therefore improves continually over time. All of the data is processed on the device itself. Whenever the data deviates from the patient’s individual norm, the wearable vibrates and thus informs the patient about the deviation. At the same time, Adamm can also send an SMS to a previously nominated nurse or person of trust. The device is not dependent on the computing power of a smartphone and therefore offers true autonomy. However, Adamm can send data as needed to an app or a web portal.
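
How a device might learn a personal “normal condition” and flag deviations can be sketched with a running mean and standard deviation. This is not Adamm’s actual algorithm, which the manufacturer does not describe here; the class name, the warm-up length and the three-sigma threshold are assumptions chosen for illustration.

```python
import math


class BaselineMonitor:
    """Learns a running mean and variance (Welford's method) for one vital sign."""

    def __init__(self, threshold_sigmas: float = 3.0, warmup: int = 30):
        self.threshold_sigmas = threshold_sigmas  # hypothetical alert threshold
        self.warmup = max(warmup, 2)              # readings needed before alerting
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0                            # sum of squared deviations

    def update(self, value: float) -> bool:
        """Return True if value deviates from the learned baseline, else learn from it."""
        alert = False
        if self.count >= self.warmup:
            std = math.sqrt(self._m2 / (self.count - 1))
            alert = std > 0 and abs(value - self.mean) > self.threshold_sigmas * std
        if not alert:  # only normal readings refine the baseline
            self.count += 1
            delta = value - self.mean
            self.mean += delta / self.count
            self._m2 += delta * (value - self.mean)
        return alert


# Hypothetical breathing rates: a calm phase, then a sudden jump.
monitor = BaselineMonitor(warmup=10)
for breaths_per_minute in [15, 17] * 10:
    monitor.update(breaths_per_minute)
print(monitor.update(30))
```

Because the statistics are updated one reading at a time, nothing has to be stored or sent elsewhere, which fits the idea of processing all data on the device itself.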

Support for emergency call centres

AI-based edge devices not only offer assistance in terms of monitoring patient data: the Danish company Corti, for example, has developed a system that supports operations managers in emergency stations. Orb is a real-time decision-making system, which uses AI technology to identify important patterns in the incoming emergency call. It warns the dispatcher of events that require the swiftest possible response, such as a heart attack.

The device is simply placed on the dispatcher’s table for this purpose. It connects to the audio stream of the telephone in order to monitor emergency calls. It is not explicitly preprogrammed with sample events, because the AI algorithm learns to identify key factors by listening to large numbers of calls. The system also considers non-verbal sounds, which can supply important information. A very fast response is not the only advantage of Edge Computing, as Corti co-founder and CTO Lars Maaløe stresses: “Efficiency is crucial for edge devices – particularly in an emergency setting. And Edge Computing has the significant benefit of allowing the Orb to function continuously, even when the internet connection is interrupted.”

By listening in on the phone, Orb should reduce the number of undiscovered heart attacks by more than 50 per cent and detect within 50 seconds whether a heart attack has happened. This represents an important time gain, since from collapse to the start of resuscitation, the chance of the casualty surviving drops by 10 per cent per minute. Dispatchers could therefore urgently do with some extra assistance in order to detect a cardiac arrest quickly and efficiently.

Enhanced MRI images

Edge technology also helps to improve services for patients in the hospital. For example, edge computing allows faster recording times, improved image quality and fewer discrepancies using magnetic resonance imaging machines from GE Healthcare. The MRI machines have embedded high-performance graphics processors for this purpose. Together with AIRx, an AI-based automated workflow tool for performing MRI brain scans, they enable automatic identification of anatomical structures. The system then independently determines the cutting point and angle of visual recordings for neurological examinations. This leads to fewer recording errors and significantly reduces the time the patient spends in the MRI machine.

AIRx is based on Edison, a General Electric platform, which is intended to accelerate the development and roll-out of AI technology. Edison can integrate and assimilate data from different sources, apply advanced analyses and AI to transform data and generate appropriate findings on this basis. “AI is fundamental to achieving precision health and must be pervasively available from the cloud to the edge and directly on medical devices”, stresses Dr. Jason Polzin, General Manager of MR Applications, GE Healthcare. “Real-time, critical-care use cases demand AI at the edge.”

From pure fiction to a real market opportunity

Intelligent machines and self-teaching computers will open up exciting prospects for the electronics industry.

The idea of thinking, or even feeling, machines was long merely a vision of science-fiction authors. But thanks to rapid developments in semiconductors and new ideas for the programming of self-teaching algorithms, Artificial Intelligence (AI) is today a real market, opening up exciting prospects for businesses.

According to management consultants McKinsey, the global market for AI-based services, software and hardware is set to grow by as much as 25 per cent a year, and is projected to be worth USD 130 billion by 2025. Investment in AI is therefore booming, as the survey “Artificial Intelligence: the next digital frontier” by the McKinsey Global Institute affirms. It reports that, in 2016, businesses – primarily major tech corporations such as Google and Amazon – spent as much as USD 27 billion on in-house research and development in the field of intelligent robots and self-teaching computers. A further USD 12 billion was invested in AI externally in the same year – that is, by private equity companies, by risk capital funds, or through mergers and acquisitions. This amounted in total to some USD 39 billion – triple the volume seen in 2013. Most current external investment (about 60 per cent) is flowing into machine learning (totalling as much as USD 7 billion). Other major areas of investment include image recognition (USD 2.5 to 3.5 billion) and voice recognition (USD 600 to 900 million).

Intelligent machines and self-teaching computers are opening up new market opportunities in the electronics industry. Market analyst TrendForce predicts that global revenues from chip sales will increase by 3.1 per cent a year between 2018 and 2022. It is not just the demand for processors that is rising, however; applications of AI are also driving new solutions in electronics fields such as sensor technology, hardware accelerators and digital storage media. Market research organisation marketsandmarkets, for example, forecasts a rise from USD 2.35 billion in 2017 to USD 9.68 billion by 2023 – among other reasons as a result of big data, the Internet of Things, and applications relating to Artificial Intelligence. The creation of AI-based services is also increasing demand for higher-performance network infrastructures, data centres and server systems.

AI is thus a vital market for the electronics industry as well. With our semiconductor solutions, experienced experts and extensive partner network, we will be glad to help you develop exciting new products.

Sensors as a basis for AI

Sensor fusion allows increasingly accurate images of the environment to be developed by fusing data from different sensors. To achieve faster results and reduce the flood of data, the sensors themselves are becoming intelligent too.

Systems with Artificial Intelligence need data. The more data, the better the results. This data can either originate in databases – or it can be recorded using sensors. Sensors measure vibrations, currents and temperatures on machines, for example, and thus provide an AI system with information for predicting when maintenance is due. Others – integrated in wearables – record pulse, blood pressure and perhaps blood sugar levels in people in order to draw conclusions regarding the state of health.

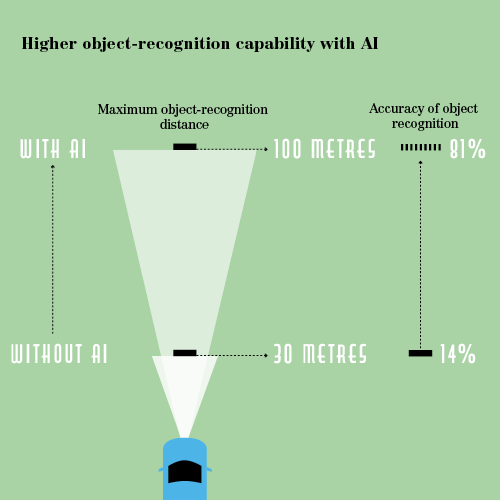

Sensor technology has gained considerable momentum in recent years from areas such as mobile robotics and autonomous driving: for vehicles to move autonomously through an environment, they have to recognise the surroundings and be able to determine their precise position. To do this, they are equipped with the widest array of sensors. Ultrasound sensors record obstacles at a short distance, for example when parking. Radar sensors measure the position and speed of objects at a greater distance. Lidar sensors (light detection and ranging) use invisible laser light to scan the environment and deliver a precise 3D image. Camera systems record important optical information such as the colour and contour of an object and can even measure the distance from the travel time of a light pulse.

More information is needed

Attention today is no longer focusing solely on the position of an object; information such as orientation, size, colour and texture is also becoming increasingly important. Various different sensors have to work together to ensure this information is captured reliably. That’s because every sensor system offers specific advantages. However, it is only by fusing the information from the different sensors – in a process known as sensor fusion – that a precise, complete and reliable image of the surroundings is generated. A simple example of this is the motion sensors used in smartphones, among other devices: only by combining accelerometer, magnetic field sensor and gyroscope can these sensors measure the direction and speed of a movement.
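
One common and very simple way to fuse two motion sensors is a complementary filter. The sketch below shows the principle for a single tilt angle; it is an illustration only, not the method used in any particular smartphone, and the blending factor, sample values and function names are assumptions.

```python
import math


def accel_tilt_deg(accel_x: float, accel_z: float) -> float:
    """Tilt angle from gravity as seen by the accelerometer (noisy but drift-free)."""
    return math.degrees(math.atan2(accel_x, accel_z))


def complementary_filter(angle_deg: float, gyro_rate_dps: float,
                         accel_x: float, accel_z: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyroscope rate with the accelerometer tilt."""
    gyro_angle = angle_deg + gyro_rate_dps * dt  # smooth, but drifts over time
    return alpha * gyro_angle + (1.0 - alpha) * accel_tilt_deg(accel_x, accel_z)


# Hypothetical samples: device held still at 10 degrees,
# gyroscope reporting a constant 0.5 deg/s bias although nothing moves.
angle = 0.0
true_tilt = math.radians(10.0)
for _ in range(500):
    angle = complementary_filter(angle, 0.5, math.sin(true_tilt),
                                 math.cos(true_tilt), dt=0.01)
print(round(angle, 1))
```

Integrating the biased gyroscope alone would drift further from the true angle with every second, whereas the fused estimate settles close to the 10 degrees indicated by gravity. Each sensor compensates for the weakness of the other.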

Sensors are also becoming intelligent

Not only can modern sensor systems deliver data for AI, they can also use it: such sensors can pre-process the measurement data and thus ease the burden on the central processor unit. The AEye start-up developed an innovative hybrid sensor, for example, which combines camera, solid-state lidar and chips with AI algorithms. It overlays the 3D point cloud of the lidar with the camera’s 2D pixels and thus delivers a 3D image of the environment in colour. The relevant information is then filtered from the vehicle’s environment using AI algorithms and evaluated. The system is not only more precise by a factor of 10 to 20 and three times faster than individual lidar sensors, it also reduces the flood of data to central processor units.
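
Overlaying lidar points with camera pixels essentially means projecting each 3D point into the image and reading the colour at that position. The following sketch uses a basic pinhole camera model; it is not AEye’s implementation, and it assumes that lidar and camera are already calibrated into one shared coordinate frame. All names and parameters are hypothetical.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]  # x right, y down, z forward (metres)
Colour = Tuple[int, int, int]         # red, green, blue


def colourise_points(points: List[Point3D], image: List[List[Colour]],
                     focal_px: float, cx: float, cy: float
                     ) -> List[Tuple[Point3D, Optional[Colour]]]:
    """Attach the camera colour to every lidar point that falls inside the image."""
    height, width = len(image), len(image[0])
    result = []
    for x, y, z in points:
        colour = None
        if z > 0:  # only points in front of the camera can be seen
            u = int(round(focal_px * x / z + cx))  # pinhole projection: pixel column
            v = int(round(focal_px * y / z + cy))  # pinhole projection: pixel row
            if 0 <= u < width and 0 <= v < height:
                colour = image[v][u]
        result.append(((x, y, z), colour))
    return result


# Tiny 4x4 test image in which every pixel encodes its own row and column.
image = [[(row, col, 0) for col in range(4)] for row in range(4)]
cloud = [(0.0, 0.0, 5.0), (0.0, 0.0, -1.0)]
for point, colour in colourise_points(cloud, image, focal_px=2.0, cx=2.0, cy=2.0):
    print(point, colour)
```

Points behind the camera or outside its field of view keep no colour, so a downstream algorithm can tell which parts of the point cloud are covered by both sensors.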

Sensors supply a variety of information to the AI system

- Vibration

- Currents

- Temperature

- Position

- Size

- Colour

- Texture

- and much more…

AI smarter than humans?

What began in the 1950s with a conference has grown into a key technology. It is already influencing our lives today, and as the intelligence of machines increases in the future that influence is bound to spread much more. But is AI smarter than humans?

Smart home assistants order products online on demand. Chatbots talk to customers with no human intermediary. Self-driving cars transport the occupants safely to their destination, while the driver is engrossed in a newspaper. All of those are applications that are already encountered today in our everyday lives – and they all have something in common: they would not be possible without Artificial Intelligence.

Artificial Intelligence is a key technology which, in the years ahead, will have a major impact not only on our day-to-day lives but also on the competitiveness of the economy as a whole. “Artificial Intelligence has enormous potential for improving our lives – in the healthcare sector, in education, or in public administration, for example. It offers major opportunities to businesses, and has already attained an astonishingly high degree of acceptance among the public at large,” says Achim Berg, president of industry association Bitkom.

How everything began

Developments in this technology began as far back as the 1950s. The term Artificial Intelligence was actually coined even before the technology truly existed, by computer scientist John McCarthy at a conference at Dartmouth College in the USA in 1956. The US government became aware of AI, and saw potential advantages from it in the Cold War, so it provided McCarthy and his colleague Marvin Minsky with the financial resources necessary to advance the new technology. By 1970, Minsky was convinced: “In from three to eight years, we will have a machine with the general intelligence of an average human being.” But that was to prove excessively optimistic. Scientists around the world made little progress in advancing AI, so governments began cutting funding. A kind of AI winter closed in. It was only in 1980 that efforts to develop intelligent machines picked up pace again. They culminated in a spectacular battle: in 1997, IBM’s supercomputer Deep Blue defeated chess world champion Garry Kasparov.

Bots are better gamers

AI began to advance rapidly from then on. Staying with games of a kind, the progress being made was demonstrated by the victory of a bot developed by OpenAI against a team of professional players in the multiplayer game Dota 2 – one of the most complex of all video games. What was so special about this triumph was that the bot taught itself the game in just four months. By continuous trial and error over huge numbers of rounds played against itself, the bot discovered what it needed to do to win. The bot was only set up to play a one-to-one game, however – normally two teams of five play against each other. Creating a team of five bots is the OpenAI developers’ next objective. OpenAI is a non-profit research institute co-founded by Elon Musk with the stated aim of developing safe AI for the good of all humanity.

Intelligence doubled in two years

So, is AI already as clever as a person today? To find that out, researchers headed by Feng Liu at the Chinese Academy of Sciences in Beijing devised a test which measures the intelligence of machines and compares it to human intelligence. The test focused on digital assistants such as Siri and Cortana. It found that the cleverest helper of all is the Google Assistant. With a score of 47.28 points, its intelligence is ranked just below that of a six-year-old human (55.5 points). That’s pretty impressive. But what is much more impressive is the rate at which the Google Assistant is becoming more intelligent. When Feng Liu first conducted the test back in 2014, the Google Assistant scored just 26.4 points – meaning it almost doubled its intelligence in two years. If the system keeps on learning at that rate, it won’t be long before Minsky’s vision expressed back in 1970 of a machine with the intelligence of a human adult becomes reality.

Simulating a human

Surprisingly, despite the long history of the development of intelligent machines, there is still no scientifically recognised definition of AI today. The term is generally used to describe systems which simulate human intelligence and behaviour. The most fitting explanation comes from renowned MIT professor Marvin Minsky, who defined AI as “the science of making machines do those things that would be considered intelligent if they were done by people”.

Artificial Intelligence | Startups

A look at the start-up scene reveals the diversity of the areas in which AI can be used. These young companies are developing products for industries as varied as healthcare, robotics, finance, education, sports, safety, and many more. We present a small selection of interesting start-ups here.

Connected Cars for Everyone

In the form of Chris, the start-up German Autolabs provides an assistant designed specifically for motorists, which easily and conveniently provides access to their smartphone via smart speech recognition and gesture control, including while driving. Chris can be integrated with any vehicle, regardless of its model and year of manufacture. The aim is to make connected car technology available to all through the combination of a flexible and scalable assistant software and hardware for retrofitting.

Make Your Own Voice Assistant

Snips is developing a new voice platform for hardware manufacturers. The service, based on Artificial Intelligence, is intended to allow developers to embed voice assistance services on any device. At the same time, a consumer version is due to be provided over the Internet, running on Raspberry Pi-powered devices. Privacy is at the forefront of this, with the system sending no data to the cloud and operating completely offline.

Realistic Simulation for Autonomous Driving Systems

Automotive Artificial Intelligence offers a virtual 3D platform which realistically imitates cars’ driving environment. It is intended to be used as a means of testing software for fully automated driving, helping to explore the systems’ limits. Self-learning agents provide the reality needed in the virtual platform. Aggressive drivers turn up just as often as overcautious ones, and there are arbitrary lane changes just as there are unforeseeable braking manoeuvres from other (simulated) vehicles that are part of the traffic.

Feeding Pets More Intelligently

With SmartShop Beta, Petnet provides a digital marketplace that guides dog and cat owners towards suitable food for their pets using Artificial Intelligence – depending on their breed and specific needs. The start-up has itself also developed the Petnet SmartFeeder for feeding pets. This allows the pets to be automatically supplied with individual portions. The system gives notifications for successful feeds and when food levels are low. An automatic repeat order can also be set up in the SmartShop.

Smart Water Bottle

Bellabeat has already successfully brought Healthtracker to market in the form of women’s jewellery. Building on this, the start-up has developed an intelligent water bottle with Spring. By way of sensors, its system can record how much water the user drinks, how active she is, how much she sleeps, or her stress sensitivity levels. An app, with the assistance of special AI algorithms, is used to analyse users’ individual hydration needs and give a recommendation for fluid intake.

Drone for the Danger Zone

Hivemind Nova is a quadrocopter for law enforcement, first responder and security applications. The drone learns from experience how to negotiate restricted areas or hazardous environments. Without remote control by a pilot, it autonomously explores dangerous buildings, tunnels, and other structures before people enter them. It transmits HD video and a map of the building layout to the user live. Hivemind Nova learns and continuously improves over time. The more it is used, the more capable it becomes.

Detecting Wear Ahead of Time

Konux combines smart sensors and analysis based on Artificial Intelligence. The solution is used on railways to monitor sets of points, for example. Field data, already pre-processed by the sensor, is wirelessly transmitted to an analysis platform and combined with other data sources such as timetables, meteorological data, and maintenance logs. The data is then analysed using machine-learning algorithms to detect operational anomalies and critical wear in advance.

Greater Success with Job Posts

Textio is an advanced writing platform for creating highly effective job advertisements. By analysing the hiring outcomes of more than 10 million job listings per month, Textio predicts the impact of a job post and gives instructions in real time as to how the text could be improved. To do this, the company uses a highly sophisticated predictive engine and makes it usable for anyone – no training or IT integration needed.

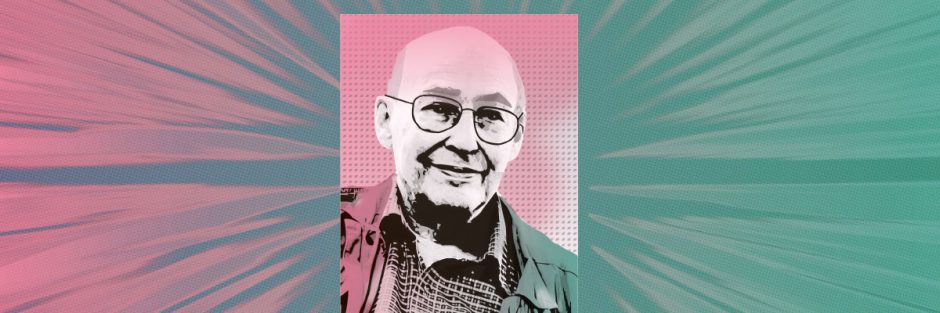

AI-pioneer Minsky: Temporarily dead?

The brain functions like a machine, or so goes the theory of Marvin Minsky, one of the most important pioneers of artificial intelligence. In other words, it can be recreated – made immortal by backing up its consciousness onto a computer.

Could our entire life simply be a computer simulation, like “the Matrix” from the Hollywood blockbuster of the same name? According to Marvin Minsky, this is entirely conceivable: “It’s perfectly possible that we are the production of some very powerful complicated programs running on some big computer somewhere else. And there’s really no way to distinguish that from what we call reality.” Such thoughts were typical of the mathematician, cognition researcher, computer engineer and great pioneer of Artificial Intelligence. Minsky combined science and philosophy like scarcely any other and questioned conventional views – but always with a strong sense of humour:

“No computer has ever been designed that is ever aware of what it’s doing; but most of the time, we aren’t either.”

Born in 1927 in New York, Minsky studied mathematics at Harvard University and received a PhD in mathematics from Princeton University. He was scarcely 20 years old when he began to take an interest in the topic of intelligence: “Genetics seemed to be pretty interesting because nobody knew yet how it worked,” Minsky recalled of that time in an article that appeared in the “New Yorker” in 1981. “But I wasn’t sure that it was profound. The problems of physics seemed profound and solvable. It might have been nice to do physics. But the problem of intelligence seemed hopelessly profound. I can’t remember considering anything else worth doing.”

Great intelligence is the sum of many non-intelligent parts

Back then, as a youthful scientist, he laid the foundation stone for a revolutionary theory, which he expanded on during his time at the Massachusetts Institute of Technology (MIT) and which finally led to him becoming a pioneer in Artificial Intelligence: Minsky held the view that the brain works like a machine and can therefore basically be replicated in a machine too. “The brain happens to be a meat machine,” according to one of his frequently quoted statements. “You can build a mind from many little parts, each mindless by itself.” Marvin Minsky was convinced that consciousness can be broken down into many small parts. His aim was to identify such components of the mind and understand them. Minsky’s view that the brain is built up from the interactions of many simple parts called “agents” is the basis of today’s neural networks.

Together with his Princeton colleague John McCarthy, he continued to develop the theory and gave the new scientific discipline a name at the Dartmouth Conference in 1956: Artificial Intelligence. Together McCarthy and Minsky founded the MIT Artificial Intelligence Laboratory some three years later – the world’s most important research centre for Artificial Intelligence ever since. Many of the ideas developed there were later seized on in Silicon Valley and translated into commercial applications.

Answerable for halting research

What is interesting is that the father of Artificial Intelligence was responsible for research into the area being halted for many years: Minsky had experimented himself with neural networks in the 1960s, but renounced them in his book “Perceptrons”. Together with his co-author Seymour Papert, he highlighted the limitations of these networks – and thus brought research into this area to a standstill for decades. Most of these limitations have since been overcome, and neural networks are a core technology for AI in the present day.

However, research into AI was by no means the only field that occupied Marvin Minsky. His Artificial Intelligence Laboratory is regarded as the birthplace of the idea that digital information should be freely available – a theory from which open-source philosophy later emerged. The institute contributed to the development of the Internet, too. Minsky also had an interest in robotics, computer vision and microscopy – his inventions in this area are still used today.

Problems of mankind could be resolved

Minsky viewed current developments in AI quite critically, as he felt they were not focused enough on creating true intelligence. In contrast to the alarmist warnings of some experts that intelligent machines would take control in the not-too-distant future, Minsky most recently advocated a more philosophical view of the future: machines that master real thinking could demonstrate ways to solve some of the most significant problems facing mankind. Death may also have been at the back of his mind in this respect: he predicted that people could make themselves immortal by transferring their consciousness from the brain onto chips. “We will be immortal in this sense,” according to Minsky. When a person grows old, they simply make a backup copy of their knowledge and experience on a computer. “I think, in 100 years, people will be able to do that.”

AI-Pioneer Minsky only temporarily dead

Marvin Minsky died in January 2016 at the age of 88. Although perhaps only temporarily: shortly before his death, he was one of the signatories of the Scientists’ Open Letter on Cryonics – the deep-freezing of human bodies at death for thawing at a future date when the technology exists to bring them back to life. He was also a member of the Scientific Advisory Board of cryonics company Alcor. It is therefore entirely possible that Minsky’s brain is waiting, shock-frozen, to be brought back to life at some time in the future as a backup on a computer.

Faster to intelligent IoT products

Developers working on products with integrated AI for the internet of things need time and major resources. This type of product typically requires up to 24 months until it is marketable. With a pre-industrialised software platform, Octonion now plans to reduce this time to only six months.

Smaller companies that want to realise products for the Internet of Things often lack the resources for electronics and software development. In addition, it takes lots of time to put together the building blocks required: connectivity, AI, sensor integration, etc. For example, the time to market for typical IoT projects is between 18 and 24 months – a very long time in the fast-paced world of the Internet.

A new solution from Octonion can help. The Swiss firm has developed a software platform for interconnecting objects and devices and equipping them with AI functions.

With this complete solution, IoT projects with integrated AI can be realised within six to eight months.

From the device to the cloud

Octonion provides a true end-to-end software solution, from an embedded device layer to cloud-based services. They include Gaia, a highly intelligent, autonomous software framework for decision-making that uses modern machine-learning methods for pattern recognition. The system can be used for a wide range of applications in a variety of sectors. What’s more, it guarantees that the data generated by the IoT device belongs to the customer only, who is also the sole project operator.

Reduce costs and development time

The result is a complete IoT system with Artificial Intelligence that provides a full solution from IoT devices or sensors and a gateway to the cloud. Since the individual platform levels are device-independent and compatible with all hosting solutions, customers can realise all their applications. Developers can select the functional module they require on each level. This enables them to adjust the platform to their individual requirements, providing the perfect conditions for developing and operating proprietary IoT solutions quickly and easily. With the Octonion platform, proprietary IoT solutions are marketable in only six months.

AI better than the doctor?

Cognitive computer assistants are helping clinicians to make diagnostic and therapeutic decisions. They evaluate medical data much faster, while delivering at least the same level of precision. It is hardly surprising, therefore, that applications with Artificial Intelligence are being used more frequently.

Hospitals and doctors’ surgeries have to deal with huge volumes of data: X-ray images, test results, laboratory data, digital patient records, OR reports, and much more. To date, they have mostly been handled separately. But now the trend is towards bringing everything into a single unified software framework. This data integration is not only enabling faster processing of medical data and creating the basis for more efficient interworking between the various disciplines. It is also promising to deliver added value. New, self-learning computing algorithms will be able to detect hidden patterns in the data and provide clinicians with valuable assistance in their diagnostic and therapeutic decision-making.

Better diagnosis thanks to Artificial Intelligence: 30 times faster than a doctor with an error rate of 1%.

Source: PwC

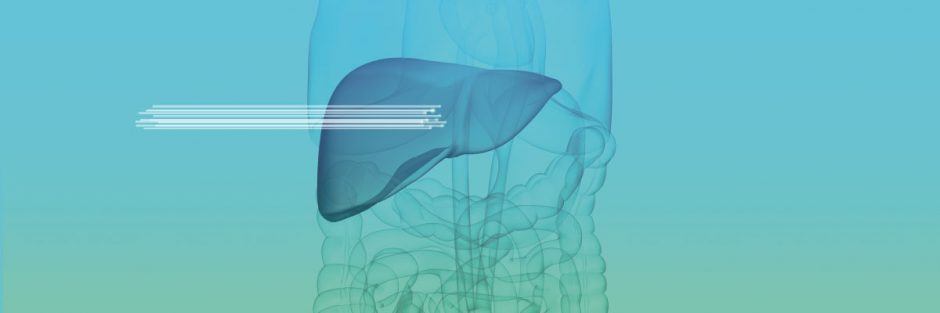

Analysing tissue faster and more accurately

“Artificial Intelligence and robotics offer enormous benefits for our day-to-day work,” asserts Prof. Dr Michael Forsting, Director of the Diagnostic Radiology Clinic of the University Hospital in Essen. The clinic has used a self-learning algorithm to train a system in lung fibrosis. After just a few learning cycles, the computer was making better diagnoses than a doctor: “Artificial Intelligence is helping us to diagnose rare illnesses more effectively, for example. The reasons are that – unlike humans – computers do not forget what they have once learned, and they are better than the human eye at comparing patterns.”

Especially in the processing of image data, cognitive computer assistants are proving helpful in relieving clinicians of protracted, monotonous and recurring tasks, such as accurately tracing the outlines of an organ on a CT scan. The assistants are also capable of filtering information from medical image data that a clinician would struggle to identify on-screen.

Artificial Intelligence diagnosis – Better than the doctor

These systems are now even surpassing humans, as a study at the University of Nijmegen in the Netherlands demonstrates: the researchers assembled two groups to test the detection of cancerous tissue. One comprised 32 developer teams using dedicated AI software solutions; the other comprised twelve pathologists. The AI developers were provided in advance with 270 CT scans, of which 110 indicated dangerous nodes and 160 showed healthy tissue. These were intended to aid them in training their systems. The result: the best AI system attained virtually 100 per cent detection accuracy and additionally colour-highlighted the critical locations. It was also much faster than a pathologist, who took 30 hours to detect the infected samples with corresponding precision. Most notably, the clinicians overlooked metastases less than 2 millimetres in size under time pressure. Only seven of the 32 AI systems were better than the pathologists, however.
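As a brief illustration of what "detection accuracy" means on a data set like this one, the following sketch computes accuracy, sensitivity and specificity from a confusion matrix. Only the split of 110 scans with nodes and 160 healthy scans comes from the text. The error counts (two missed cases, one false alarm) are hypothetical and are not results of the study, and `detection_metrics` is an illustrative helper name.

```python
def detection_metrics(tp, fn, tn, fp):
    """Compute basic detection metrics from confusion-matrix counts.

    tp: diseased samples correctly flagged    fn: diseased samples missed
    tn: healthy samples correctly cleared     fp: healthy samples wrongly flagged
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,    # share of all samples classified correctly
        "sensitivity": tp / (tp + fn),    # share of diseased samples detected
        "specificity": tn / (tn + fp),    # share of healthy samples cleared
    }


# 110 positive and 160 negative samples as in the text;
# the numbers of errors below are HYPOTHETICAL, chosen only for illustration.
metrics = detection_metrics(tp=108, fn=2, tn=159, fp=1)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity is the figure that matters most for the small metastases mentioned above: a system can show high overall accuracy and still miss a clinically relevant share of diseased samples.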

The systems involved in the test are in fact not just research projects, but are already in use. One example is fibrosis research at the Charité hospital in Berlin, which is using the Cognitive Workbench from a company called ExB to automate the highly complex analysis of tissue samples for the early detection of pathological changes. The Cognitive Workbench is a proprietary, cloud-based platform which enables users to create and train their own AI-capable analyses of complex unstructured and structured data sources in text and image form. Ramin Assadollahi, CEO and Founder of ExB, states: “In addition to diagnosing hepatic fibrosis, we can bring our high-quality deep-learning processes to bear in the early detection of melanoma and colorectal cancers.”

Cost savings for the healthcare system

According to PwC, AI applications in breast cancer diagnoses mean that mammography results are analysed 30 times faster than by a clinician – with an error rate of just one per cent. There are prospects for huge progress, not only in diagnostics. In a pilot study, Artificial Intelligence was able to predict with greater than 70 per cent accuracy how a patient would respond to two conventional chemotherapy procedures. In view of the prevalence of breast cancer, the PwC survey reports that the use of AI could deliver huge cost savings for the healthcare system. It estimates that over the next 10 years, cumulative savings of EUR 74 billion might be made.

Digital assistants for patients

AI is also helping patients in very concrete ways to overcome a range of difficulties in their everyday lives, such as visual impairment, loss of hearing or motor diseases. The “Seeing AI” app, for example, helps the visually impaired to perceive their surroundings. The app recognises objects, people, text or even cash in a photo that the user takes on his or her smartphone. The AI-based algorithm identifies the content of the image and describes it in a sentence which is read out to the user. Other examples include smart devices such as the “Emma Watch”, which intelligently compensates for the tremors typical of Parkinson’s disease patients. Microsoft developer Haiyan Zhang developed the smart watch for graphic designer Emma Lawton, who herself suffers from Parkinson’s. More Parkinson’s patients will be provided with similar models in future.

Chips driving Artificial Intelligence

From the graphics processing unit through neuromorphic chips to the quantum computer – the development of Artificial Intelligence chips is supporting many new advances.

AI-supported applications must keep pace with rapidly growing data volumes and often have to respond simultaneously in real time. The classic CPUs that you will find in every computer quickly reach their limits in this area because they process tasks sequentially. Significant improvements in performance, particularly in the context of deep learning, would be possible if the individual processes could be executed in parallel.

Hardware for parallel computing processes

A few years ago, the AI sector focused its attention on the graphics processing unit (GPU), a chip that had actually been developed for an entirely different purpose. It offers a massively parallel architecture, which can perform computing tasks in parallel using many smaller yet still efficient computing units. This is exactly what is required for deep learning. Manufacturers of graphics processing units are now building GPUs specifically for AI applications. A server with just one of these high-performance GPUs has a throughput 40 times greater than that of a dedicated CPU server.
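Why deep learning lends itself to parallel hardware can be seen in a small sketch. In a neural-network layer, each output value is an independent dot product, so the outputs can be computed one after another or all at once. The example below uses a thread pool from the Python standard library only to show that independence. It does not reproduce GPU performance, and the function names and the tiny weight matrix are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Dot product of one weight row with the input vector."""
    return sum(w * x for w, x in zip(row, vector))


def layer_sequential(weights, inputs):
    """Compute each output one after another, as a single core would."""
    return [dot(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Hand each independent row to a worker; results keep their order.

    Threads stand in for the many small computing units of a GPU here.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))


# Hypothetical 4x3 weight matrix and input vector.
weights = [[1, 0, 2], [0, 3, 1], [4, 1, 0], [2, 2, 2]]
inputs = [1, 2, 3]

print(layer_sequential(weights, inputs))
print(layer_parallel(weights, inputs))
```

Both functions return the same values because no output depends on any other. That property is what lets a GPU spread the rows of a much larger layer across thousands of computing units.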