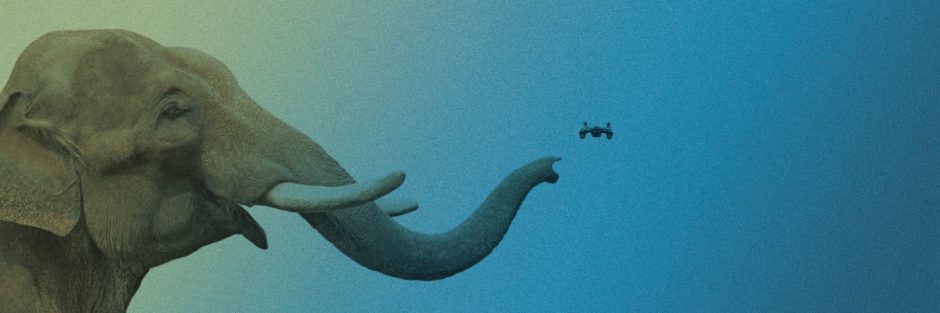

The concurrent development and design of chips and software has enabled researchers to realise chips that are not only uncommonly small and energy-efficient but also boast powerful AI capabilities, extending right through to training. One possible application is in nano drones that can navigate their way through a room independently.

To the untrained eye, the chips look no different from those found in any ordinary electronic device. Yet the chips developed at the Massachusetts Institute of Technology (MIT) and named Eyeriss and Navion are a revelation, and might just be the key to the future of artificial intelligence. Using these chips, even the most diminutive IoT devices could be equipped with powerful “smart” abilities, the likes of which could only be provided by gigantic data centres until now.

Energy efficiency is vital

Professor Vivienne Sze from the MIT Department of Electrical Engineering and Computer Science (EECS) – and a member of the development team – is keen to emphasise that the real opportunity offered by these chips is not in their impressive capability for deep learning. It is much more about their energy efficiency. The chips need to master computationally intensive algorithms while making do with the energy available on the IoT devices themselves. This is the only way that AI can find widespread application on the “edge”. The Eyeriss chip is 10 or even 1,000 times more efficient than current hardware.

A symbiosis of software and hardware

In Professor Sze’s lab, research is also underway to determine how software ought to be designed to fully harness the power of computer chips. A low-power chip called Navion was developed at MIT to answer this question. To name one potential application, this chip could be used to navigate a drone using 3D maps with previously unthinkable efficiency. Above all, such a drone could be minute, no larger than a bee.

The concurrent development of the AI software and hardware was crucial in this instance. It enabled researchers to build Navion, a chip just 20 square millimetres in size that requires only 24 milliwatts of power. That is around a thousandth of the power drawn by a light bulb. With this tiny amount of power, the chip can process up to 171 camera images per second and perform inertial measurements, all in real time. Using this data, it calculates its position in the room. It might also be conceivable for the chip to be incorporated into a small pill that could gather and evaluate data from inside the human body once swallowed.
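To put these figures into perspective, the energy budget per camera image can be estimated with a short calculation. The sketch below uses only the two numbers quoted above (24 milliwatts and up to 171 images per second) and assumes, purely for illustration, that the chip draws its full 24 milliwatts while running at its peak frame rate. The result is therefore a best-case estimate, not a measured value.

```python
# Figures quoted in the article.
POWER_W = 0.024          # 24 milliwatts
FRAMES_PER_SECOND = 171  # peak camera frame rate


def energy_per_frame_mj(power_w: float, frames_per_second: float) -> float:
    """Return the energy spent per frame in millijoules.

    Power is energy per second, so dividing by the frame rate gives
    the energy available for each individual frame.
    """
    return power_w / frames_per_second * 1000.0


# Assumption: full power draw at the peak frame rate.
per_frame_mj = energy_per_frame_mj(POWER_W, FRAMES_PER_SECOND)

# "A thousandth of a light bulb" implies a bulb of roughly this wattage.
implied_bulb_w = POWER_W * 1000

print(f"Energy per frame: {per_frame_mj:.2f} mJ")
print(f"Implied light bulb: {implied_bulb_w:.0f} W")
```

Under this assumption, each camera image has to be processed with roughly a seventh of a millijoule.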

The chip achieves this level of efficiency through a variety of measures. For one thing, it minimises the volume of data, in the form of camera images and inertial measurements, that is stored on the chip at any given point in time. The development team was also able to physically reduce the bottleneck between the location where the data are stored and the location where they are analysed, and came up with clever schemes for re-using the data. The way the data flow through the chip has also been optimised. Finally, certain computation steps are skipped altogether, such as operations on zeros whose result would be zero anyway.
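The last of these measures, skipping computations whose result is known to be zero, can be illustrated in software. The following is a minimal sketch of the general idea and not the Navion hardware design: the function name `sparse_dot` and the sample values are hypothetical. It computes a dot product but only performs a multiplication when both operands are non-zero, and counts how many multiplications were actually needed.

```python
from typing import Sequence, Tuple


def sparse_dot(weights: Sequence[float],
               activations: Sequence[float]) -> Tuple[float, int]:
    """Dot product that skips every term containing a zero operand.

    Returns the result together with the number of multiplications
    that were actually carried out.
    """
    total = 0.0
    multiplications = 0
    for weight, activation in zip(weights, activations):
        if weight == 0 or activation == 0:
            # The product would be zero, so it cannot change the sum.
            continue
        total += weight * activation
        multiplications += 1
    return total, multiplications


# Hypothetical sample data with several zero entries.
result, work_done = sparse_dot([0.5, 0.0, 2.0, 0.0], [4.0, 3.0, 0.0, 1.0])
print(result, work_done)
```

In this example only one of the four possible multiplications is carried out, yet the result is identical to that of a full dot product. In hardware, the saving comes from not spending energy on the skipped operations.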

A basis for self-learning, miniaturised electronics

Research into how AI can be more effectively integrated into edge devices is also being conducted at other institutes. For example, a team of researchers at the Fraunhofer Institute for Microelectronic Circuits and Systems (IMS) has developed a kind of artificial intelligence for microcontrollers and sensors that comprises a fully configurable artificial neural network.

This solution, called AIfES, is a platform-independent machine-learning library that can be used to realise self-learning, miniaturised electronics that do not require any connection to cloud infrastructure or powerful computers. The library constitutes a fully configurable artificial neural network, which can also generate appropriately deep networks for deep learning if needed. The source code has been reduced to a minimum, meaning that the AI can even be trained on the microcontroller itself. Thus far, this training phase has only been possible in data centres. AIfES is not concerned with processing large data volumes. Instead, only the strictly required data are transferred in order to set up very small neural networks.
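To give a feel for how little code and data a very small network needs for training, the sketch below trains a single artificial neuron using nothing but basic arithmetic. It does not use AIfES or reproduce its API, and a single neuron is far simpler than the networks the library supports. The function names, the learning task (the logical OR of two inputs) and the training settings are all assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs=1000, learning_rate=1.0):
    """Train one sigmoid neuron with per-sample gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            z = bias + sum(w * x for w, x in zip(weights, inputs))
            error = sigmoid(z) - target  # gradient of the log loss w.r.t. z
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, inputs) -> int:
    """Return 1 if the neuron's output is at least 0.5, otherwise 0."""
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical training data: the logical OR of two inputs.
OR_SAMPLES = [((0.0, 0.0), 0), ((0.0, 1.0), 1),
              ((1.0, 0.0), 1), ((1.0, 1.0), 1)]

weights, bias = train_neuron(OR_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in OR_SAMPLES]
print(predictions)
```

The whole model consists of three numbers (two weights and a bias), and training needs only additions, multiplications and one exponential per step. This is the kind of workload that can plausibly fit on a small microcontroller.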

What is AIfES?

The team of researchers has already produced several demonstrators, including one on a cheap 8-bit microcontroller for recognising handwritten digits. An additional demonstrator can recognise complex gestures and numbers written in the air. For this application, scientists at the IMS developed a recognition system comprising a microcontroller and an absolute orientation sensor. To begin with, several people are asked to write the digits from zero to nine several times over. The neural network captures these written patterns, learns them and then identifies them autonomously in the next step.

Studies conducted at the research institutes provide an outlook for how AI software and hardware will continue to develop symbiotically in the future and open up complex AI functions in IoT and edge devices in the process.