In just a few years, every new vehicle will be fitted with electronic driving assistants. They will process information from both inside the car and its surrounding environment to control comfort and assistance systems.

“We are teaching cars to negotiate road traffic autonomously,” reports Dr Volkmar Denner, Chairman of the Board of Bosch. “Automated driving makes the roads safer. Artificial Intelligence is the key. Cars are becoming smart,” he asserts. As part of those efforts, the company is currently developing an on-board vehicle computer featuring AI. It will enable self-driving cars to find their way around even complex environments, including traffic scenarios that are new to them.

Transferring knowledge by updates

The on-board AI computer knows what pedestrians and cyclists look like. In addition to this so-called object recognition, AI also helps self-driving vehicles to assess the situation around them. They know, for example, that an indicating car is more likely to be changing lane than one that is not indicating. This means self-driving cars with AI can detect and assess complex traffic scenarios, such as a vehicle ahead turning, and apply the information to adapt their own driving. The computer stores the knowledge gathered while driving in artificial neural networks. Experts then check the accuracy of the knowledge in the laboratory. Following further testing on the road, the artificially created knowledge structures can be downloaded to any number of other on-board AI computers by means of updates.

Assistants recognising speech, gestures and faces

Bosch also intends to collaborate with US technology company Nvidia on the design of the central vehicle computer. Nvidia will supply Bosch with a chip holding the algorithms for the vehicle’s movement created through machine learning. As Nvidia founder Jensen Huang points out, on-board AI in cars will not only be used for automated driving: “In just a few years, every new vehicle will have AI assistants for speech, gesture and facial recognition, or augmented reality.” In fact, the chip manufacturer has also been working with Volkswagen on the development of an intelligent driving assistant for the electric microvan I.D. Buzz. It will process sensor data from both inside the car and its surrounding environment to control comfort and assistance systems. These systems will be able to accumulate new capabilities in the course of further developments in autonomous driving. Thanks to deep learning, the car of the future will learn to assess situations precisely and analyse the behaviour of other road users.

3D recognition using 2D cameras

Key to automated driving is creating the most exact map possible of the surrounding environment. The latest camera systems are also using AI to do that. A project team at Audi Electronics Venture, for example, has developed a mono camera which uses AI to generate a high-precision three-dimensional model of the surroundings. The sensor is a standard, commercially available front-end camera. It captures the area in front of the car to an angle of about 120 degrees, taking 15 frames per second at a 1.3 megapixel resolution. The images are then processed in a neural network. That is also where the so-called semantic segmentation takes place. In this process, each pixel is assigned one of 13 object classes. As a result, the system is able to recognise and distinguish other cars, trucks, buildings, road markings, people and traffic signs. The system also uses neural networks to gather distance information. This is visualised by so-called ISO lines – virtual delimiters which define a constant distance. This combination of semantic segmentation and depth perception creates a precise 3D model of the real environment. The neural network is pre-trained through so-called unsupervised learning, having been fed with large numbers of videos of road scenarios captured by a stereo camera. The network subsequently learned autonomously to understand the rules by which it generates 3D data from the mono camera’s images.
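To make the two processing steps more tangible, here is a minimal Python sketch of what per-pixel classification and constant-distance lines could look like on a tiny image. It is not Audi's implementation: the function names, the 10-metre band spacing and the toy score and depth values are assumptions made purely for illustration, and in a real system a trained neural network would supply the per-pixel class scores and depth estimates.

```python
# Illustrative sketch only; all names and numbers are hypothetical.
# The article names six of the 13 object classes; the rest are not listed.
CLASS_NAMES = ["car", "truck", "building", "road marking", "person", "traffic sign"]


def label_pixels(scores):
    """Semantic segmentation step: pick the highest-scoring class per pixel.

    scores[row][col] is a list holding one score per object class.
    """
    return [
        [max(range(len(pixel)), key=lambda c: pixel[c]) for pixel in row]
        for row in scores
    ]


def distance_bands(depth_map, spacing_m=10.0):
    """Group each pixel's estimated depth (in metres) into bands of equal width."""
    return [[int(depth // spacing_m) for depth in row] for row in depth_map]


def iso_line_mask(bands):
    """Mark pixels where the distance band changes versus the left or upper
    neighbour. These boundaries approximate lines of constant distance."""
    mask = []
    for r, row in enumerate(bands):
        mask_row = []
        for c, band in enumerate(row):
            differs_left = c > 0 and row[c - 1] != band
            differs_up = r > 0 and bands[r - 1][c] != band
            mask_row.append(differs_left or differs_up)
        mask.append(mask_row)
    return mask


def pixels_per_second(pixels_per_frame=1_300_000, fps=15):
    """Raw pixel throughput for the camera figures quoted in the article."""
    return pixels_per_frame * fps


# Toy 2x2 image with three class scores per pixel (made-up values).
scores = [
    [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1]],
    [[0.2, 0.2, 0.6], [0.3, 0.4, 0.3]],
]
labels = label_pixels(scores)

# Toy depth estimates in metres (made-up values).
depth = [[4.0, 12.0], [4.5, 25.0]]
bands = distance_bands(depth)
lines = iso_line_mask(bands)
```

Combining `labels` (what each pixel is) with `bands` (how far away it is) gives the kind of labelled 3D picture described above. The throughput helper also shows why efficient processing matters: at 1.3 megapixels and 15 frames per second, the network has to handle 19.5 million pixels every second.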

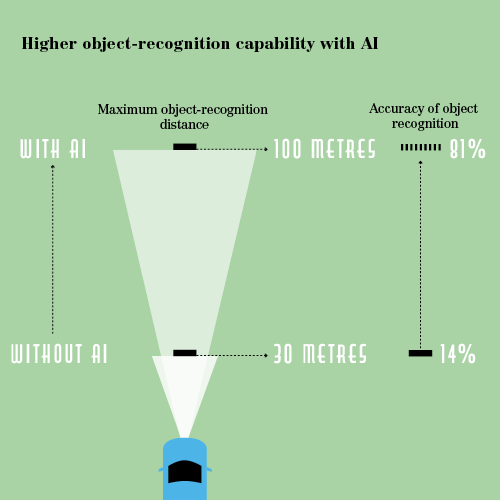

Mitsubishi Electric has also developed a camera system that uses AI. It will warn drivers of future mirrorless vehicles of potential hazards and help avoid accidents, especially when changing lane. The system uses a new computing model for visual recognition that copies human vision. It does not capture a detailed view of the scene as a whole, but instead focuses rapidly on specific areas of interest within the field of vision. The relatively simple visual recognition algorithms used by the AI conserve the system resources of the on-board computer. The system is nevertheless able to distinguish between object types such as pedestrians, cars and motorcycles. Compared to conventional camera-based systems, the technology will be able to significantly extend the maximum object recognition range from the current approximately 30 metres to 100 metres. It will also be able to improve the accuracy of object recognition from 14 per cent to 81 per cent.

AI is becoming a competitive factor

As intelligent assistance systems are being implemented ever more frequently, AI is becoming a key competitive factor for car manufacturers. That is true with regard to the use of AI for autonomous driving as well as in the development of state-of-the-art mobility concepts based on AI. According to McKinsey, almost 70 per cent of customers are already willing to switch manufacturer today in order to gain better assisted and autonomous driving features. The advice from Dominik Wee, Partner at McKinsey’s Munich office, is: “Premium manufacturers, in particular, need to demonstrate to their highly demanding customers that they are technology leaders in AI-based applications as in other areas – for example in voice-based interaction with the vehicle, or in finding a parking space.”